By Rob Albritton, Senior Director, AI Center of Excellence and Jonathan Cotugno, Senior Machine Learning Engineer

Though it may feel surreal or unreal, the future of autonomous systems is here, and it has come to us through how we have taught computers to see – in 3D, through the process of semantic image segmentation.

To understand how this mission critical technology is being used, let’s look at segmentation, the various processes and terms associated with it, and how these contribute to 3D semantic segmentation being harnessed in emerging technology. Then let’s look at how Octo’s oLabs is diving into 3D semantic segmentation of point clouds to help customers meet their missions.

A quick guide to 3D semantic image segmentation

In digital image processing and computer vision, image segmentation is the partitioning of a digital image into multiple segments to simplify the representation of that image by changing it into something more meaningful and easier for a computer to analyze.

However, in semantic segmentation, pixels are linked to an image and given a class label to provide context. The class labels can be anything – a car, person, house, etc.

Let’s say we classify a set of pixels as a car. Through semantic segmentation, computer vision software will label all the objects as car-related objects. Semantic segmentation can then give us context of a scene, from which we can explicitly derive the shape, area, or perimeter of an object.

Now it is time to count occurrences of the objects. This is done through instance segmentation. Instance segmentation software can label the separate occasions or instances in which an object appears in an image. For example, the user might need the image processor to count the number of trees on a street or cars on a highway.

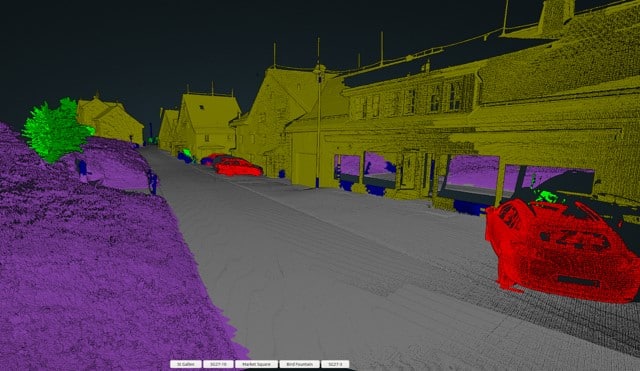

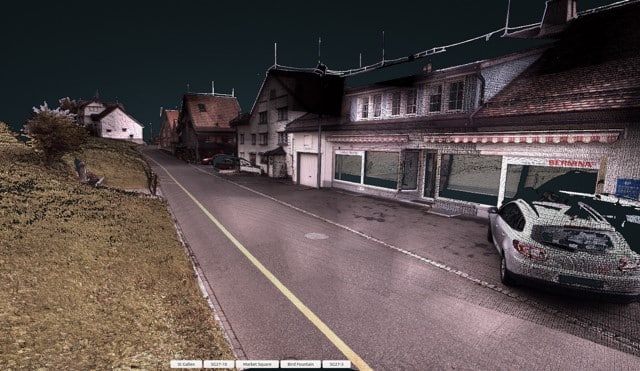

Finally, there are point clouds – a set of data points commonly produced by marking the external surfaces of objects around 3D semantic segmentation of point of clouds.is used for 3D visualization, producing measurements and autonomous scene understanding through machine and deep learning.

Semantically segmented point cloud

Point cloud

3D semantic image segmentation takes the idea of image segmentation to the next level by extracting data in three-dimensional format used by autonomous systems that help government agencies, defense organizations, health care institutions, and civilian entities perform myriad tasks that would have been impossible in the past. 3D semantic image segmentation can provide a robotic system, a mobile device, or an autonomous vehicle instant contextual information of the entire 3D scene around the sensor. This is incredibly powerful information for a wealth of applications using autonomous systems to gain data for meeting life-or-death missions.

Real-world 3D semantic image segmentation

While computer vision and deep learning have been rapidly evolving on the software front, sensors for autonomous vehicles, robotics, and mobile devices have been developing at the same pace. 3D semantic image segmentation is being used to extract and interpret data in a variety of areas.

Autonomous vehicles – 3D semantic image segmentation is used to help cars understand the scene they are in. For example, the segmentation algorithm can identify curbs, sidewalks, and other objects the car should avoid.

Medical applications – Major research is being conducted in the medical community to harness 3D semantic image segmentation and the data that comes with it. For example, tomography (x-rays, ultrasound, etc.) uses 3D data for tumor and cancer detection, diagnosing, and more.

Disaster cleanup – Debris estimation is another area in which 3D semantic image segmentation is beneficial: after a natural disaster, it can help governments identify the extent of damage, from which they can calculate the costs associated with the damage.

Search and rescue – 3D semantic image segmentation can help emergency responders rapidly identify possible points of entry into rubble after a disaster. For example, the 7.0 magnitude earthquake that struck Haiti in 2010 produced devastating debris. Had 3D semantic image segmentation been available at the time, more lives could have been saved by rescue teams leveraging 3D visibility and data by looking into rubble to pinpoint hazards, people, and animals.

Defense applications of 3D semantic segmentation

Especially given the vast amount of data produced on the battlefield and at the tactical edge, defense organizations have much to gain by adopting technology driven by 3D semantic image segmentation.

Mission planning – Innovative defense agencies and organizations are utilizing 3D semantic image segmentation as a mission planning tool to identify ingress/egress points of buildings that close combat forces plan to enter. Additionally, it can be used to identify obstacles and threats along dismounted (on foot) routes.

Training – When it comes to training with augmented reality and virtual reality (AR/VR), 3D semantic image segmentation allows soldiers to identify objects of interest and then turn them off or on in AR or VR scenes. For example, a user may classify all cars in a point cloud with semantic segmentation and then run through one virtual scenario with cars in the scene and another with all the cars removed from the scene.

Situational awareness – This technology can be used to bolster soldier situational awareness, rapidly providing enhanced near real-time situational awareness with change and object detection. For example, soldiers can identify when the environment has been altered during a specified period of time on a particular street. Perhaps compared to a first viewing the road has been repaved, or there is a dirt mount where an IED has been buried. Using 3D semantic image segmentation, troops can identify such changes.

Close combat – 3D semantic segmentation holds much promise for close combat and small team tactical applications. Leveraging emerging 3D semantic image segmentation models, teams can rapidly identify buildings, trees, vehicles, or people seen in light detection and ranging (LiDAR) or photogrammetric point clouds. Imagine a semantically segmented point cloud of an urban scene. Benefiting from 3D semantic image segmentation, the soldier or operator will know how many buildings there are and the surface area of those buildings, how many vehicles there are and their sizes, where roads are, and how many feet of road extend from one building to the next.

3D semantic image segmentation at oLabs

At oLabs, we are diving head first into the world of 3D semantic image segmentation. After performing exhaustive research and testing, we have developed robust methods for 3D semantic segmentation of point of clouds derived from a variety of sources and with an increasing list of classes that can then be used by autonomous systems.

Octo’s oLabs team is actively leveraging 3D semantic image segmentation to rapidly identify building ingress and egress points (for example, windows and doors). We have methods to semantically segment point clouds from classes including cars, buildings, high and low vegetation, and natural and human-made terrain. And that is just the start of how agencies we work with benefit from our research and development.

If your Federal Government organization would like to learn more about using 3D semantic image segmentation and what we’re doing at oLabs, reach out to a member of Team Octo. Contact us to schedule a detailed discussion.